
508-577-4556

Capstone Project

Witney: WIT Campus Tour Guide Robot

For my senior capstone at Wentworth Institute of Technology (WIT), I led a team of four other engineering students in developing a tour-guide robot. The robot can provide information about the campus, give directions, and notify people of news and emergencies. It is also designed to serve as an attraction and mascot for the campus. The project tested the collective skills and knowledge we gained through our education at WIT.

Team:

Bryant Gill, Igor Carvalho, Sabbir Ahmmed, Maxwell Young, Jeffrey Lynch

Witney

This capstone project aims to develop an autonomous robot that will be an information resource for students, guests, and faculty on campus. Through its open-source platform, the robot will also be an educational resource for the engineering disciplines (mechanical, electrical, and software engineering).

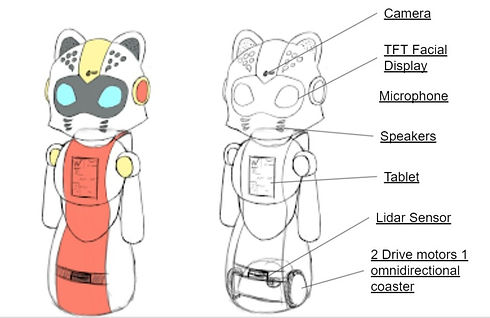

The robot's look follows Wentworth's color scheme and adopts a leopard motif, so the robot also acts as a mascot for the university. To deliver quality service to the people who interact with it, the robot draws on the design principles and methods of social robotics. Human-robot interaction is achieved through speech recognition (similar to a chatbot algorithm), text-to-speech vocalization, facial expressions, and an onboard screen that displays text and images.
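The page does not say how the chatbot-like layer picks a reply, so the following is only a minimal sketch of one simple approach: keyword-overlap matching. It assumes the speech-recognition stage has already produced a plain-text transcript. The names `best_answer`, `tokenize`, and `FAQ`, along with the placeholder answers, are hypothetical and are not the team's code.

```python
import re
from typing import Iterable, Optional, Set, Tuple

# Hypothetical FAQ entry: a set of trigger keywords paired with a canned answer.
FaqEntry = Tuple[Set[str], str]


def tokenize(text: str) -> Set[str]:
    """Lower-case a transcript and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def best_answer(transcript: str, faq: Iterable[FaqEntry], min_overlap: int = 1) -> Optional[str]:
    """Return the answer whose keywords overlap the transcript most, or None if too few match."""
    words = tokenize(transcript)
    best: Optional[str] = None
    best_score = 0
    for keywords, answer in faq:
        score = len(words & keywords)
        # Strictly greater-than: on a tie, the earlier FAQ entry wins.
        if score > best_score:
            best, best_score = answer, score
    return best if best_score >= min_overlap else None


# Placeholder entries for illustration only; real answers would come from campus staff.
FAQ = [
    ({"makerspace", "where", "location", "find"}, "Placeholder answer about where the makerspace is."),
    ({"makerspace", "hours", "open", "close"}, "Placeholder answer about makerspace hours."),
]
```

For example, `best_answer("When is the makerspace open?", FAQ)` selects the hours entry because it shares two keywords instead of one. A `None` result would be a natural cue for the robot to say it does not know and hand off to the text-to-speech stage with a fallback phrase.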

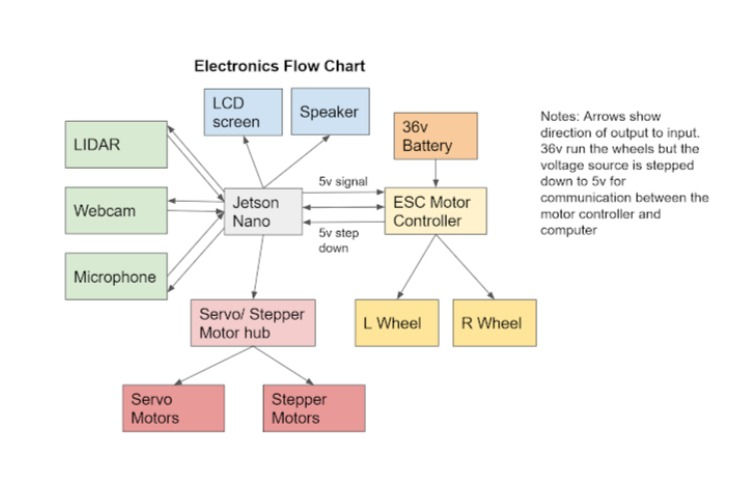

The robot has Internet of Things (IoT) capabilities and receives campus information and current events from an RSS web feed. Its autonomous functions rely on image processing, GPS navigation, and lidar (Light Detection and Ranging). The image processing and lidar navigation use a neural network program that runs on the robot's brain, which for this iteration of the robot is an NVIDIA Jetson Nano.
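The RSS updates described above could work roughly like this minimal sketch, which uses only the Python standard library. It assumes an RSS 2.0 feed (news in `<item>` elements with `<title>` and `<link>` children; an Atom feed would use `<entry>` instead). The function names are hypothetical, and no real campus feed URL is given on this page.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Dict, List, Union


def fetch_feed(url: str, timeout: float = 10.0) -> bytes:
    """Download a feed as raw bytes; `url` is a placeholder for the real campus feed."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        # Returning bytes lets the XML parser honor the feed's own encoding declaration.
        return response.read()


def parse_rss_items(rss_xml: Union[str, bytes], limit: int = 5) -> List[Dict[str, str]]:
    """Extract up to `limit` title/link pairs from the <item> elements of an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    items: List[Dict[str, str]] = []
    for item in root.iter("item"):
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
        })
        if len(items) >= limit:
            break
    return items


def headlines_to_speech(items: List[Dict[str, str]]) -> str:
    """Join headlines into one sentence that a text-to-speech engine could read aloud."""
    if not items:
        return "There is no campus news right now."
    titles = "; ".join(item["title"] for item in items)
    return f"Here is the latest campus news: {titles}."
```

On the robot, a periodic task might call `parse_rss_items(fetch_feed(url))`, show the titles on the tablet, and pass `headlines_to_speech(...)` to the speech output. Keeping network access in its own function makes the parsing testable offline.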

Sensors and Hardware

- RGB Camera: Detects and identifies objects and people.
- LIDAR Sensor: Detects obstacles within a room and creates a map of the environment.
- Conference Microphone: Provides room-scale audio pickup, so the robot can hear and take in speech when spoken to.
- LCD Tablet: Displays text- and image-based information.
- Speakers: Deliver audio information.
- Hub Drive Motors: Wheels with the motors built into them.
- High-Torque Servos: Articulate the robot's limbs.
- GPS Module: Reports the robot's location.
- Jetson Nano: Linux-based computer that serves as the robot's brain.
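The LIDAR sensor's obstacle-detection role can be illustrated with a minimal sketch of a forward "is anything too close?" check. It assumes a scan arrives as evenly spaced range readings in meters, with angles in radians, 0 pointing straight ahead and positive angles counter-clockwise. The function names, the 30° half field of view, and the 0.5 m stop distance are hypothetical values, not figures from the project.

```python
import math
from typing import Sequence


def nearest_obstacle_ahead(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    half_fov: float = math.radians(30),
) -> float:
    """Return the closest valid range (m) within +/- half_fov of straight ahead, or math.inf."""
    nearest = math.inf
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        # Wrap into [-pi, pi) so scans starting at 0 or at -pi are handled the same way.
        angle = (angle + math.pi) % (2 * math.pi) - math.pi
        if abs(angle) > half_fov:
            continue
        # Many lidars report 0, inf, or NaN for "no return"; ignore those readings.
        if not math.isfinite(r) or r <= 0.0:
            continue
        nearest = min(nearest, r)
    return nearest


def should_stop(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    stop_distance: float = 0.5,
) -> bool:
    """True if an obstacle in the forward cone is closer than stop_distance meters."""
    return nearest_obstacle_ahead(ranges, angle_min, angle_increment) < stop_distance
```

With a 360-point scan at 1° spacing (`angle_increment = 2 * math.pi / 360`), a reading of 0.3 m at index 350 (10° to the right) triggers `should_stop`, while an obstacle at 90° to the side does not. A check like this could gate the hub drive motors independently of higher-level navigation.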

Design Process: Sketches

As part of the design process, we settled on the direction of the robot's aesthetic through sketches.

The design had three goals: house the sensors without interfering with their function, enable the robot's locomotion and the articulation of its limbs, and give the robot a friendly look that encourages social interaction between the user and the robot.

This is my sketch proposal for the look of the robot.

Design Process: CAD and FEA

After finalizing the design direction of the robot, we used a combination of SolidWorks and Fusion 360 to model the robot's frame. The assembly included models of internal components such as the wheels, batteries, and the tablet used in the robot. The robot's internal structure uses 20x20 mm aluminum framing. A model of the frame was subjected to FEA (finite element analysis). In the simulation, load was applied to the section of frame that mounts the wheels, which is the weakest and most critical portion of the robot. This section was overloaded with a 200 lb load. Under this loading, the frame showed no significant deformation and retained a satisfactory factor of safety of <2.
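The factor of safety reported from the FEA is conventionally the material's yield strength divided by the peak stress found in the simulation, n = σ_yield / σ_max. The following minimal sketch shows that arithmetic along with the conversion of the 200 lb test load to newtons. The function names are hypothetical, and any stress values passed in are placeholders; the page does not state the alloy or the simulated stresses.

```python
# 1 lbf = 0.45359237 kg * 9.80665 m/s^2, which is exactly 4.4482216152605 N.
LBF_TO_N = 4.4482216152605


def lbf_to_newtons(force_lbf: float) -> float:
    """Convert a force in pounds-force to newtons."""
    return force_lbf * LBF_TO_N


def factor_of_safety(yield_strength: float, max_stress: float) -> float:
    """n = sigma_yield / sigma_max; both arguments must use the same units (e.g. MPa)."""
    if max_stress <= 0:
        raise ValueError("max_stress must be positive")
    return yield_strength / max_stress
```

`lbf_to_newtons(200)` gives about 890 N, which is the force magnitude an FEA tool working in SI units would apply. A factor of safety above 1 means the peak stress stays below yield, and larger values leave more margin for unmodeled loads.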

Results

Through this project we learned several lessons about how to build the robot successfully. After running FEA on the team's various designs, we found that the best construction was aluminum framing for the skeleton and 3D printing for the body. The aluminum framing makes the robot much stronger than a fully 3D-printed design would be. The team also decided to combine Igor's ideas and mine, using the aluminum framing Igor proposed and the 3D-printed body from my design. The showcase went exceedingly well, with many people coming to see the demonstration of our robot. The demonstration included voice-activated movement and stopping, as well as answering questions about the Wentworth makerspace through speech recognition. Ideally, future Wentworth students will pick up the project and expand the robot's capabilities, but the first prototype was a complete success.

Project Links

Link to virtual presentation:

https://www.youtube.com/watch?v=jujsgAfheos&ab_channel=Bgill

PDF of capstone poster:

https://mywentworth.sharepoint.com/sites/CECSDesignShowcase/Shared%20Documents/Forms/AllItems.aspx?id=%2Fsites%2FCECSDesignShowcase%2FShared%20Documents%2FApps%2FMicrosoft%20Forms%2FSOE%20Showcase%20Materials%202021%201%2FPosters%20%28PDF%29%2FMECH5500%5FEl%2DSadi%5FTour%20Guide%20Bot%5F%202021%5FHaifa%20El%2DSadi%2Epdf&parent=%2Fsites%2FCECSDesignShowcase%2FShared%20Documents%2FApps%2FMicrosoft%20Forms%2FSOE%20Showcase%20Materials%202021%201%2FPosters%20%28PDF%29&p=true
